Neural Network

Project Description

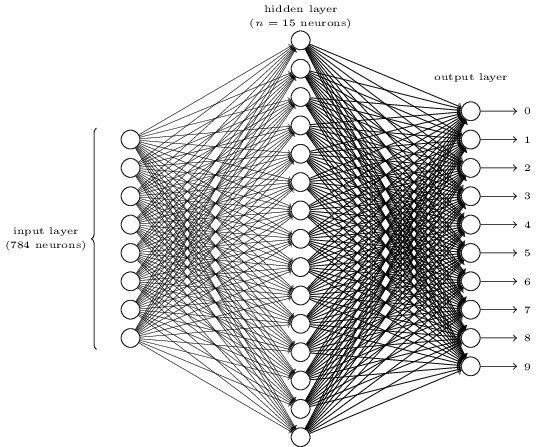

This project demonstrates my implementation of a neural network from first principles. In 2016, I built it from scratch in C++, relying on the standard library, and validated it by training on the MNIST handwritten-digit dataset and achieving accurate digit recognition on the test set.

Gradient Descent

Training a neural network means minimizing an error function that measures the difference between the network's predicted outputs and the desired outputs. Gradient descent does this by computing the partial derivative of the error function with respect to each network parameter (weights and biases). Each derivative gives the direction and rate at which the error increases as that parameter changes, so the error is reduced by moving every parameter a small step in the opposite direction, in proportion to its derivative.
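The update rule can be seen on the smallest possible case. This is a minimal C++ sketch, assuming a single linear neuron (prediction = w * x + b) and the squared error E = 0.5 * (prediction - target)^2 for one example; the names `LinearNeuron`, `gradient_step` and `train` are hypothetical and not taken from the original project.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical single linear neuron: prediction = w * x + b.
struct LinearNeuron {
    double w = 0.0;
    double b = 0.0;
    double predict(double x) const { return w * x + b; }
};

// One gradient-descent step on E = 0.5 * (prediction - target)^2 for a single
// training example. Returns the error measured before the update.
double gradient_step(LinearNeuron& n, double x, double target, double learning_rate) {
    const double diff = n.predict(x) - target;  // dE/dprediction
    const double dE_dw = diff * x;              // dprediction/dw = x
    const double dE_db = diff;                  // dprediction/db = 1
    // Step each parameter against its derivative, scaled by the learning rate.
    n.w -= learning_rate * dE_dw;
    n.b -= learning_rate * dE_db;
    return 0.5 * diff * diff;
}

// Repeats the per-example update over the whole data set for `epochs` passes.
void train(LinearNeuron& n, const std::vector<double>& xs,
           const std::vector<double>& targets, double learning_rate, int epochs) {
    assert(xs.size() == targets.size());
    for (int e = 0; e < epochs; ++e) {
        for (std::size_t i = 0; i < xs.size(); ++i) {
            gradient_step(n, xs[i], targets[i], learning_rate);
        }
    }
}
```

For example, training on points sampled from t = 2x + 1 (x = -1, 0, 1, 2) with a learning rate of 0.05 drives w toward 2 and b toward 1. A much larger learning rate would make each step overshoot, which is why the steps are kept small.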

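The computational challenge lies in the network's layered, composite structure: the error depends directly only on the output neurons, whose outputs are functions of the previous layer's outputs, and so on back to the inputs. The derivative of the error with respect to any weight therefore has to be assembled with the chain rule through every layer in between. Backpropagation does this efficiently by computing error terms at the output layer and propagating them backward layer by layer, reusing each layer's terms to compute those of the layer before it. Parameters are adjusted in small increments, scaled by a learning rate, to avoid overshooting the minimum, and the update is repeated for each training example so that the overall error decreases gradually.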
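The chain of dependencies is easiest to follow in a small case. The following is a minimal C++ sketch, not the original 2016 implementation, assuming one hidden layer of three sigmoid units, a single sigmoid output, squared error, and one update per training example; the names `TinyNet`, `forward`, `squared_error`, `backprop` and `sgd_step` are hypothetical. The backward pass computes an error term `delta_out` at the output and reuses it for every hidden-layer derivative instead of differentiating each weight from scratch.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

// Hypothetical layer sizes, chosen only for illustration.
constexpr std::size_t kIn = 2;
constexpr std::size_t kHidden = 3;

double sigmoid(double z) { return 1.0 / (1.0 + std::exp(-z)); }

// kIn inputs -> kHidden sigmoid units -> one sigmoid output.
struct TinyNet {
    std::array<std::array<double, kIn>, kHidden> w1{};  // hidden weights
    std::array<double, kHidden> b1{};                   // hidden biases
    std::array<double, kHidden> w2{};                   // output weights
    double b2 = 0.0;                                    // output bias
};

// Gradients have exactly the same shape as the parameters.
using Grads = TinyNet;

// Forward pass: fills the hidden activations `h` and returns the output y.
double forward(const TinyNet& net, const std::array<double, kIn>& x,
               std::array<double, kHidden>& h) {
    double z_out = net.b2;
    for (std::size_t j = 0; j < kHidden; ++j) {
        double z = net.b1[j];
        for (std::size_t i = 0; i < kIn; ++i) z += net.w1[j][i] * x[i];
        h[j] = sigmoid(z);
        z_out += net.w2[j] * h[j];
    }
    return sigmoid(z_out);
}

// Squared error E = 0.5 * (y - target)^2 for one example.
double squared_error(const TinyNet& net, const std::array<double, kIn>& x, double target) {
    std::array<double, kHidden> h{};
    const double diff = forward(net, x, h) - target;
    return 0.5 * diff * diff;
}

// Backpropagation: chain rule from the output layer back toward the inputs.
Grads backprop(const TinyNet& net, const std::array<double, kIn>& x, double target) {
    std::array<double, kHidden> h{};
    const double y = forward(net, x, h);
    Grads g{};
    // dE/dz_out = (y - target) * sigmoid'(z_out), where sigmoid'(z_out) = y * (1 - y).
    const double delta_out = (y - target) * y * (1.0 - y);
    g.b2 = delta_out;
    for (std::size_t j = 0; j < kHidden; ++j) {
        g.w2[j] = delta_out * h[j];
        // Reuse delta_out: pass it back through w2[j] and hidden unit j's sigmoid.
        const double delta_h = delta_out * net.w2[j] * h[j] * (1.0 - h[j]);
        g.b1[j] = delta_h;
        for (std::size_t i = 0; i < kIn; ++i) g.w1[j][i] = delta_h * x[i];
    }
    return g;
}

// One stochastic gradient-descent step: move every parameter against its derivative.
void sgd_step(TinyNet& net, const Grads& g, double learning_rate) {
    assert(learning_rate > 0.0);
    for (std::size_t j = 0; j < kHidden; ++j) {
        for (std::size_t i = 0; i < kIn; ++i) net.w1[j][i] -= learning_rate * g.w1[j][i];
        net.b1[j] -= learning_rate * g.b1[j];
        net.w2[j] -= learning_rate * g.w2[j];
    }
    net.b2 -= learning_rate * g.b2;
}
```

A common way to check code like this is a finite-difference gradient check: nudge one parameter by +eps and -eps, compute (E(+eps) - E(-eps)) / (2 * eps), and compare the result with the value `backprop` returns for that parameter.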